Link Prediction with GNNs and Text Features

Predicting links between reviewers with Graph Neural Networks (GNNs), using text features obtained by passing review texts through a language model

Motivations

I was reading about Graph Neural Networks (GNNs) and came across Cora, a citation network dataset of scientific publications that includes Bag-of-Words (BoW) embeddings as node features for training GNNs.

In 2021, I worked with a similar dataset of metal music reviews, where I defined two users (i.e. reviewers) to be connected if they had reviewed a common album (See Here). I was interested to find out whether this metal music review dataset could similarly be used to train GNNs.
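The connection rule above can be sketched in a few lines of plain Python. This is only an illustration: the function name `build_reviewer_edges` and the toy `(user, album)` pairs are hypothetical and not taken from the actual dataset.

```python
from collections import defaultdict
from itertools import combinations


def build_reviewer_edges(reviews):
    """Return undirected reviewer-reviewer edges from (user, album) pairs."""
    # Group reviewers by the album they reviewed.
    reviewers_by_album = defaultdict(set)
    for user, album in reviews:
        reviewers_by_album[album].add(user)

    # Any two reviewers of the same album are connected.
    # Sorting gives each undirected edge one canonical (u, v) form.
    edges = set()
    for users in reviewers_by_album.values():
        for u, v in combinations(sorted(users), 2):
            edges.add((u, v))
    return edges


# Hypothetical toy data for illustration only.
reviews = [
    ("ann", "album_a"),
    ("bob", "album_a"),
    ("bob", "album_b"),
    ("cat", "album_b"),
    ("dan", "album_c"),
]
edges = build_reviewer_edges(reviews)
```

Here `dan` ends up with no edges because nobody else reviewed `album_c`.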

Methodology

I wondered whether a GNN could predict whether two users were likely to review the same album (and hence be connected), given text embeddings extracted from all the review texts written by each reviewer (which together form a profile of that reviewer). I trained two GNNs separately: one on BoW embeddings based on word frequencies, and one on paragraph embeddings based on the hidden states of a language model.
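As a rough sketch of the BoW side, each reviewer's profile can be a vector of word counts over a shared vocabulary. The whitespace tokenizer, the function names and the toy reviews below are all assumptions made for illustration; the post does not say how the BoW features were actually built.

```python
from collections import Counter


def tokenize(text):
    # Very rough tokenizer (an assumption): lowercase, split on whitespace.
    return text.lower().split()


def bow_profiles(reviews_by_user):
    """Map each user to a word-count vector over a shared, sorted vocabulary."""
    # Count words across all reviews written by each user.
    counts = {
        user: Counter(tok for text in texts for tok in tokenize(text))
        for user, texts in reviews_by_user.items()
    }
    # The shared vocabulary fixes the meaning of each vector position.
    vocab = sorted(set().union(*counts.values()))
    vectors = {user: [c[word] for word in vocab] for user, c in counts.items()}
    return vocab, vectors


# Hypothetical toy reviews for illustration only.
reviews_by_user = {
    "ann": ["Crushing riffs", "riffs and more riffs"],
    "bob": ["Slow doom riffs"],
}
vocab, vectors = bow_profiles(reviews_by_user)
```

On a real corpus the vocabulary would normally be capped or filtered, since the vector length equals the vocabulary size.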

For the language model, I used a MiniLM model because of its small number of parameters and because it was trained on social media data, which suits review text. For each reviewer (i.e. user), I concatenated all of the review texts written by that user. I then ran the language model on each user's concatenated reviews and extracted the hidden states after the forward pass to use as a “summary” text embedding vector representing that user’s “profile”. I froze the language model (LM) parameters so that gradients were not backpropagated through the LM, which saves computation.
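One common way to turn per-token hidden states into a single vector is masked mean pooling. The post does not say which reduction was used, so treat this as an assumption. The sketch uses plain Python lists so that it runs without dependencies; in practice this would be a tensor operation on the LM output. The function name and the toy values are hypothetical.

```python
def mean_pool(hidden_states, attention_mask):
    """Average the token vectors whose mask is 1, ignoring padding tokens."""
    dim = len(hidden_states[0])
    total = [0.0] * dim
    n_tokens = 0
    for vec, keep in zip(hidden_states, attention_mask):
        if keep:
            n_tokens += 1
            for k in range(dim):
                total[k] += vec[k]
    if n_tokens == 0:
        # No real tokens: fall back to the zero vector.
        return total
    return [x / n_tokens for x in total]


# Hypothetical hidden states for 3 token positions with 2 dimensions each;
# the last position is padding and is excluded by the mask.
hidden_states = [[1.0, 2.0], [3.0, 4.0], [9.0, 9.0]]
attention_mask = [1, 1, 0]
profile = mean_pool(hidden_states, attention_mask)
```

Because the LM is frozen, these profile vectors could be computed once and cached, then fed to the GNN as fixed node features.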

The GNN model I used was a simple one, comprising graph convolution, graph attention and layer normalization layers, trained with negative sampling. I trained the same initial GNN architecture separately on the BoW and the MiniLM text embeddings.
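Negative sampling for link prediction means drawing node pairs that are not connected and labelling them as negative examples. Below is a minimal sketch using uniform rejection sampling; the function name, the fixed seed and the toy graph are hypothetical, and graph libraries typically ship their own utilities for this.

```python
import random


def sample_negative_edges(nodes, positive_edges, num_samples, seed=0):
    """Uniformly sample undirected node pairs that are not positive edges."""
    rng = random.Random(seed)
    existing = {frozenset(edge) for edge in positive_edges}

    # Assumes `nodes` is a list of unique nodes and every positive edge
    # joins two distinct nodes from that list.
    max_negatives = len(nodes) * (len(nodes) - 1) // 2 - len(existing)
    if num_samples > max_negatives:
        raise ValueError("not enough unconnected pairs to sample from")

    negatives = set()
    while len(negatives) < num_samples:
        u, v = rng.sample(nodes, 2)  # two distinct nodes
        pair = frozenset((u, v))
        if pair not in existing:
            negatives.add(pair)
    return [tuple(sorted(pair)) for pair in negatives]


# Hypothetical toy graph for illustration only.
nodes = ["ann", "bob", "cat", "dan"]
positive_edges = [("ann", "bob"), ("bob", "cat")]
# Sample as many negatives as positives to get a balanced training set.
negative_edges = sample_negative_edges(nodes, positive_edges, len(positive_edges))
```

Rejection sampling is cheap when the graph is sparse, because most random pairs are unconnected.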

Findings

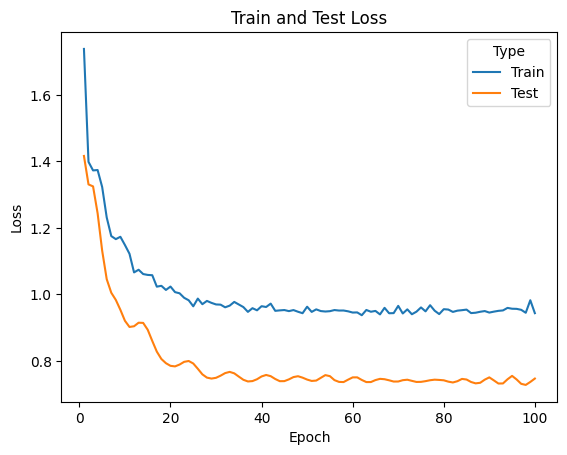

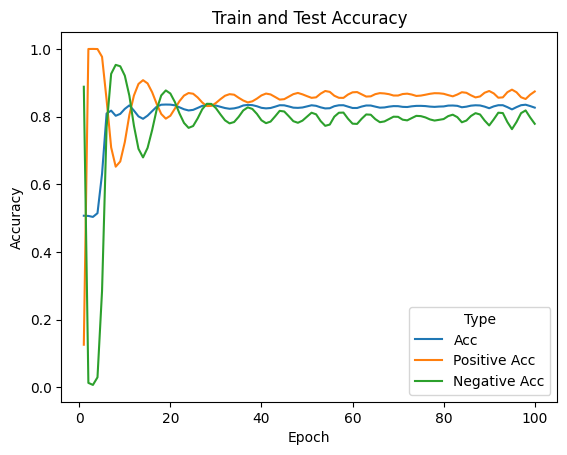

Both the BoW and MiniLM embeddings reached a similar accuracy of around 85% for link prediction, compared with a baseline of 50% for random guessing, since I sampled the same number of negative edges as positive edges.

I also noted that adding graph Laplacian regularization helped reduce overfitting and improved accuracy from 83% to 85%. The regularization term penalizes connected nodes whose embedding vectors differ substantially, so pairs that are linked in the ground truth are pushed toward similar representations and, in turn, similar predictions.
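In its standard form for an unweighted, undirected graph, the Laplacian penalty tr(HᵀLH) with L = D − A equals the sum of squared distances between the embeddings of connected nodes. The sketch below computes that edge-sum form in plain Python. Whether my implementation matched this exact form is not stated above, so read it as an assumption. The function name, the toy embeddings and the weight `lam` are hypothetical.

```python
def laplacian_penalty(embeddings, edges):
    """Sum of squared distances between embeddings of connected nodes."""
    penalty = 0.0
    for u, v in edges:
        penalty += sum((a - b) ** 2 for a, b in zip(embeddings[u], embeddings[v]))
    return penalty


# Hypothetical node embeddings and edges for illustration only.
embeddings = {"ann": [1.0, 0.0], "bob": [1.0, 1.0], "cat": [4.0, 5.0]}
edges = [("ann", "bob"), ("bob", "cat")]
penalty = laplacian_penalty(embeddings, edges)

# The penalty is added to the task loss with a small weight.
lam = 0.01  # hypothetical regularization strength
task_loss = 0.5  # placeholder for the link prediction loss
total_loss = task_loss + lam * penalty
```

In this toy example the `bob`-`cat` edge dominates the penalty because those two embeddings are far apart, which is exactly the case the regularizer discourages.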